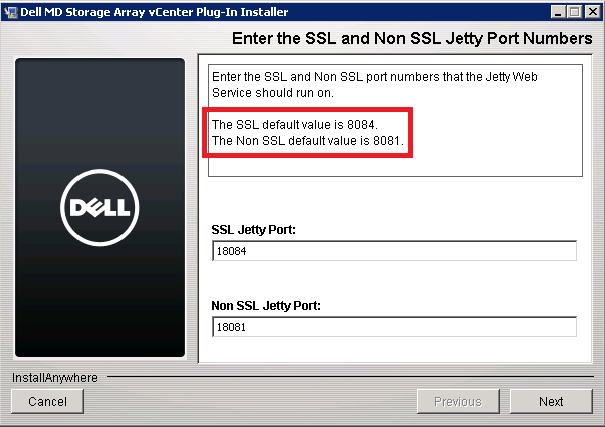

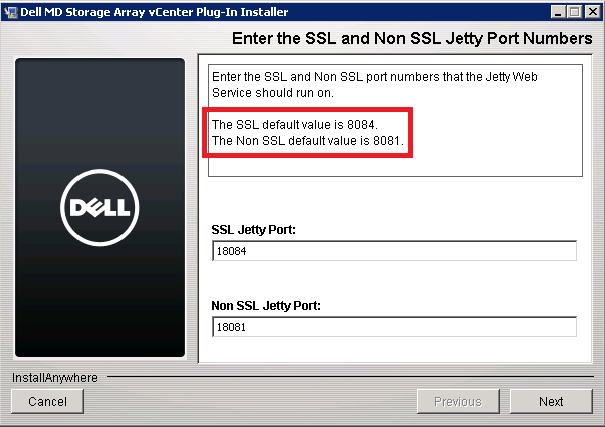

How to install the Dell MD Storage Array vCenter Plug-in

The Dell Modular Disk Storage Array vCenter Management Plug-in is a plug-in for your vCenter (doh! 🙂) that provides integrated management of Dell MD series storage arrays through […]

Some time ago, I configured a pair of Dell PowerConnect 6224 switches for an iSCSI storage network and wrote a short blog post about the configuration. This time I had a […]

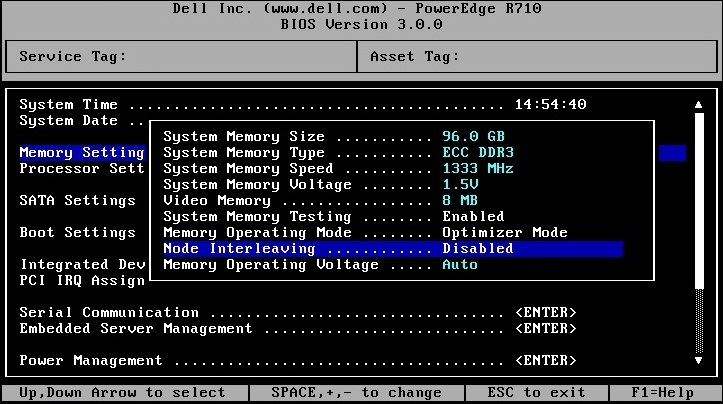

On my last project, I once again worked with Dell PowerEdge R710 servers, but this time the customer followed our advice and purchased the servers with internal 2 GB […]

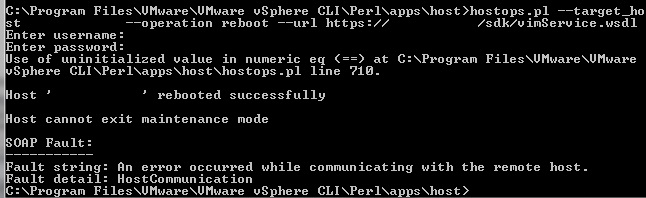

Here is a quick step-by-step guide on how to install the Dell OpenManage software on an ESXi host using the VMware vSphere Command-Line Interface (vSphere CLI) or the VMware […]

Recently, I was involved in a large infrastructure refresh project for one of our customers across several locations in Europe. The old hosts were replaced with brand-new Dell […]

A few days ago, I had the chance to configure a Dell EqualLogic PS4000XV iSCSI SAN connected through two Dell PowerConnect 6224 switches during a virtualization project at one of […]

Copyright © 2024 | DefaultReasoning.com