In the current release of VMware Cloud Foundation (4.3.x), creating a VI workload domain with more than one vSphere Distributed Switch (vDS), or with ESXi hosts that have more than two physical NICs, is only possible through the VMware Cloud Foundation API.

Example Scenario

Consider the following scenario, which is based on one of the options available when deploying the management workload domain during VCF bring-up. It corresponds to the Profile-2 setting in the deployment parameter Excel file.

- 2 vSphere Distributed Switches
  - Primary vDS for Management, vMotion, and Host Overlay traffic
  - Secondary vDS for vSAN traffic

- 4 physical NICs
  - vmnic0 & vmnic2 assigned to vDS01
  - vmnic1 & vmnic3 assigned to vDS02

- Static IP addresses for Host TEP interfaces

Based on this scenario, I assume that the ESXi hosts that will be used to create the new VI Workload Domain are already commissioned in the SDDC Manager.

Preparations

- Before you proceed, verify the prerequisites for a new VI Workload Domain in the VCF online documentation.

- Open your favorite IDE. I am using Visual Studio Code.

- Open a JSON validation tool. I am using JSON Formatter & Validator.

- Make sure that you have sufficient licenses for vSphere, vSAN, and NSX-T available in the SDDC Manager.
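If you prefer a local check over an online validator, Python's standard `json` module can report where a hand-edited specification breaks. This is a minimal sketch; it only checks JSON syntax, not whether SDDC Manager will accept the content.

```python
import json
from typing import Optional


def check_json(text: str) -> Optional[str]:
    """Return None if text parses as JSON, otherwise where and why it failed."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        # lineno/colno point at the position where the parser gave up,
        # for example right after a stray comma.
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return None
```

Pass it the contents of your spec file (for example, a hypothetical `domain-spec.json`). From a shell, `python -m json.tool domain-spec.json` does the same job: it pretty-prints the file or reports the first syntax error.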

Deploy VI Workload Domain using API

The VCF API takes JSON as input. The first step in creating the JSON input file is to look up the IDs of the ESXi hosts that will be used for the new VI Workload Domain.

Get host IDs

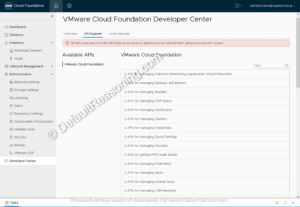

- Navigate to your SDDC Manager and log in as the administrator.

- Go to Developer Center, select the API Explorer tab, and open the APIs for managing hosts.

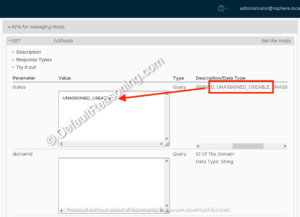

- Open the GET /v1/hosts API and copy/paste UNASSIGNED_USABLE as the value of the status parameter. This retrieves only the ESXi hosts that are not yet assigned to a workload domain.

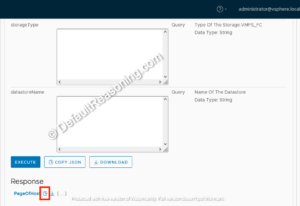

- Scroll down and click Execute.

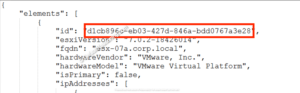

- Click the clipboard copy button next to the PageOfHost result under the Response field and paste the content into a text file.

- Make a note of the “id” of each host that will be used for the new workload domain and save the IDs. You will need them later in the hostSpecs section.
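The same lookup can be scripted. The sketch below assumes the SDDC Manager token endpoint `POST /v1/tokens` (returning an `accessToken`) and the `GET /v1/hosts?status=UNASSIGNED_USABLE` call used above. The FQDN and credentials are placeholders, and the parser only relies on the `elements` list of the PageOfHost response and the `id` and `fqdn` fields of each host.

```python
import json
import urllib.request

SDDC_MANAGER = "https://sddc-manager.example.local"  # placeholder FQDN


def get_token(username: str, password: str) -> str:
    """Request an API access token from SDDC Manager (POST /v1/tokens)."""
    body = json.dumps({"username": username, "password": password}).encode()
    req = urllib.request.Request(
        f"{SDDC_MANAGER}/v1/tokens",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["accessToken"]


def get_unassigned_hosts(token: str) -> dict:
    """Return the PageOfHost response for hosts not assigned to a domain."""
    req = urllib.request.Request(
        f"{SDDC_MANAGER}/v1/hosts?status=UNASSIGNED_USABLE",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def host_ids(page_of_host: dict) -> dict[str, str]:
    """Map each host FQDN to its SDDC Manager host ID."""
    return {h["fqdn"]: h["id"] for h in page_of_host.get("elements", [])}
```

Keep in mind that a lab SDDC Manager often uses a self-signed certificate, which `urllib` rejects unless the issuing CA is trusted on the machine running the script.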

Create the JSON specification

The next step is to create a JSON file that will contain all specifications for the new workload domain.

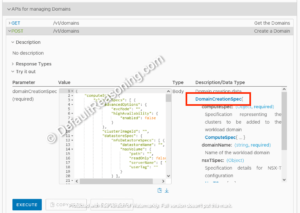

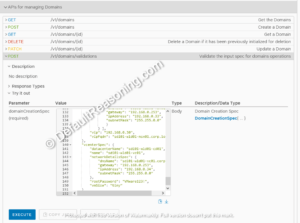

- With the SDDC Manager API explorer still open, expand the APIs for managing domains, and open the POST /v1/domains API.

- Click on the DomainCreationSpec data type to generate an example of the domain creation input.

- Copy the content into a new text file.

Note: This file contains mandatory and optional specifications. For the sake of simplicity, I will only use the required specifications. Feel free to adjust the specifications to satisfy your requirements as needed. You can find the VCF API reference in the online VMware developer documentation.

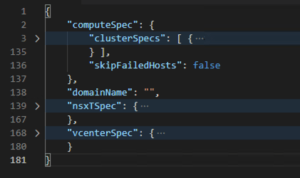

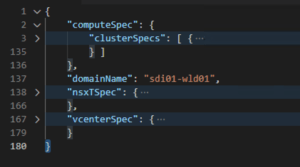

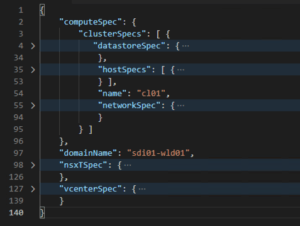

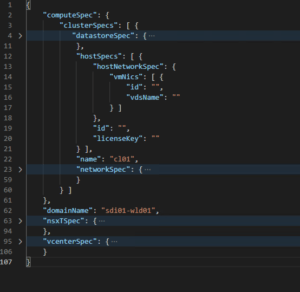

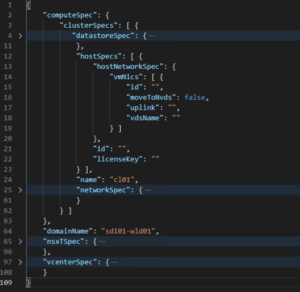

- Apart from the domain name, the JSON specification consists of three main parts: computeSpec, nsxTSpec, and vcenterSpec.

- With these sections collapsed, as shown above, remove the skipFailedHosts parameter and enter the name of the new workload domain. This is the name that will be displayed in the SDDC Manager inventory under Workload Domains. Make sure you remove the extra comma so that the JSON specification remains valid.
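After trimming, the top level of the specification is small. The sketch below shows that outline as a Python dictionary, with empty sub-sections that the following steps fill in. The domain name `wld01` is a placeholder, and the `clusterSpecs` array inside computeSpec is assumed to match the example generated by the API Explorer.

```python
import json

# Outline of the DomainCreationSpec after removing the optional top-level
# parameters. The sub-sections are filled in by the later steps.
domain_spec = {
    "domainName": "wld01",  # placeholder; shown under Workload Domains
    "vcenterSpec": {},
    "computeSpec": {"clusterSpecs": []},
    "nsxTSpec": {},
}

# json.dumps never emits trailing commas, which avoids the most common
# hand-editing mistake in this file.
print(json.dumps(domain_spec, indent=2))
```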

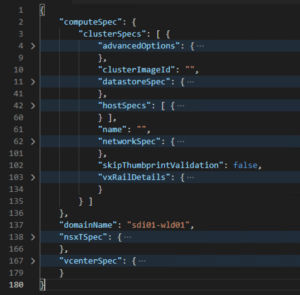

Configure clusterSpec section

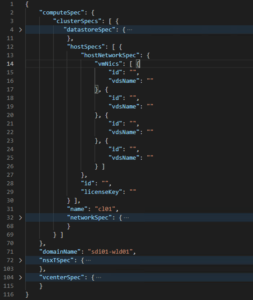

- Expand the clusterSpec section and collapse all sub-sections within it.

- Remove the advancedOptions, clusterImageId, skipThumbprintValidation, and vxrailDetails from the file. Again, make sure that the JSON format is correct.

- Enter the name of the vSphere cluster in the name section.

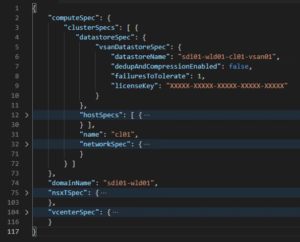

- Expand the datastoreSpec section and remove everything except the vsanDatastoreSpec part, making sure that the JSON format remains correct. Enter the vSAN datastore name, whether deduplication and compression should be enabled, the number of failures to tolerate, and a valid vSAN license key.

- Next, expand the networkSpec section and collapse the vdsSpecs section for a better view.
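For reference, the trimmed datastoreSpec from the datastoreSpec step boils down to a single vsanDatastoreSpec object. In this sketch the field names are assumed to match the example generated by the API Explorer, the datastore name and license key are placeholders, and a `failuresToTolerate` value of 1 is an assumption that fits a three-host cluster.

```python
import json


def vsan_datastore_spec(name: str, dedup: bool, ftt: int, license_key: str) -> dict:
    """Build the datastoreSpec fragment, keeping only vsanDatastoreSpec."""
    return {
        "vsanDatastoreSpec": {
            "datastoreName": name,
            "dedupAndCompressionEnabled": dedup,
            "failuresToTolerate": ftt,
            "licenseKey": license_key,
        }
    }


datastore_spec = vsan_datastore_spec(
    "wld01-vsan01", dedup=False, ftt=1, license_key="XXXXX-XXXXX-XXXXX-XXXXX-XXXXX"
)
print(json.dumps({"datastoreSpec": datastore_spec}, indent=2))
```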

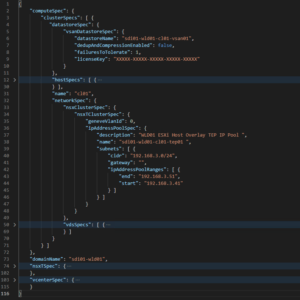

- In the nsxClusterSpec, provide the Geneve VLAN ID.

- In the ipAddressPoolSpec, enter a description for the IP pool; this is shown as the description of the address pool in the NSX-T Manager UI. Remove the ignoreUnavailableNsxtCluster parameter. Enter the name of the IP pool, the CIDR, the default gateway, and the IP pool range.
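Typos in the Host TEP pool are easy to make and only surface during validation. The helper below is a local sanity check, not part of the VCF API, that the gateway and pool ranges fall inside the CIDR. The layout (`subnets` holding `cidr`, `gateway`, and `ipAddressPoolRanges` with `start`/`end`) is assumed to match the ipAddressPoolSpec example generated by the API Explorer, and all addresses are placeholders.

```python
import ipaddress

ip_address_pool_spec = {
    "name": "wld01-tep-pool",
    "description": "Host TEP pool for wld01",
    "subnets": [
        {
            "cidr": "172.16.14.0/24",
            "gateway": "172.16.14.1",
            "ipAddressPoolRanges": [{"start": "172.16.14.101", "end": "172.16.14.150"}],
        }
    ],
}


def pool_problems(pool: dict) -> list[str]:
    """Return human-readable problems found in an ipAddressPoolSpec-like dict."""
    problems = []
    for subnet in pool.get("subnets", []):
        net = ipaddress.ip_network(subnet["cidr"])  # ValueError if host bits are set
        gateway = ipaddress.ip_address(subnet["gateway"])
        if gateway not in net:
            problems.append(f"gateway {gateway} is outside {net}")
        for rng in subnet.get("ipAddressPoolRanges", []):
            start = ipaddress.ip_address(rng["start"])
            end = ipaddress.ip_address(rng["end"])
            if start not in net or end not in net:
                problems.append(f"range {start}-{end} is outside {net}")
            elif start > end:
                problems.append(f"range {start}-{end} is reversed")
            elif start <= gateway <= end:
                problems.append(f"range {start}-{end} contains the gateway")
    return problems
```

An empty list means the pool passed these checks; it says nothing about whether the addresses are actually free on your network.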

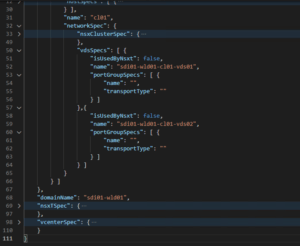

- Next, expand the vdsSpecs section and collapse the nsxClusterSpec section for a better overview.

- Remove the entire niocBandwidthAllocationSpec.

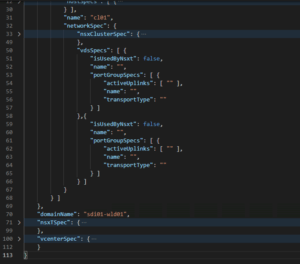

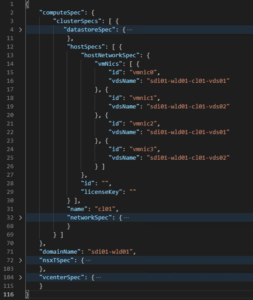

- Under vdsSpecs, copy the block containing isUsedByNsxt, name, and portGroupSpecs and paste it below the original, because we will be creating two vDSs. Separate the two switch specifications with },{ and, again, make sure that the JSON format is correct.

- Remove the activeUplinks section from both switch specifications.

- Enter the name for each switch.

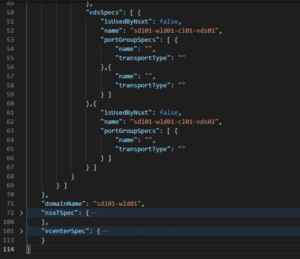

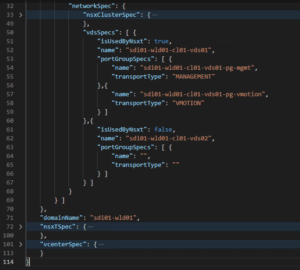

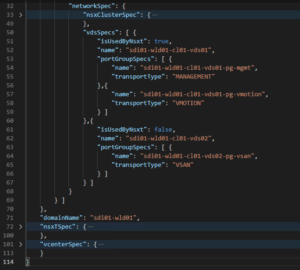

- Based on the example scenario, the primary (vDS01) switch will be used for management, vMotion, and Host Overlay traffic. The secondary (vDS02) switch is exclusively reserved for vSAN traffic. In order to configure this in the JSON specification, we need to enter the portgroup name, set the transport type, and specify if the switch is used by NSX.

- First, for vDS01, copy the entire name and transportType entry and paste it below the first one. Make sure that the JSON format is correct by adding the appropriate brackets.

- Next, for vDS01, specify the management and vMotion networks by entering the port group names that you want to use, and change the isUsedByNsxt value to true so that this switch carries the Host Overlay (Geneve) traffic.

- For vDS02, enter the name of the vSAN port group and set its transportType to VSAN.

- The vDS networking specification is now complete.
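The two-switch layout from the example scenario ends up looking roughly like the fragment below, written as a Python structure to make the nesting explicit. The port group names are placeholders, and the transportType values `MANAGEMENT`, `VMOTION`, and `VSAN` are assumed from the VCF API's port group enumeration; check them against the API reference for your release.

```python
import json

vds_specs = [
    {
        # Primary switch: management, vMotion, and NSX-T Host Overlay (Geneve).
        "name": "vDS01",
        "isUsedByNsxt": True,
        "portGroupSpecs": [
            {"name": "wld01-pg-mgmt", "transportType": "MANAGEMENT"},
            {"name": "wld01-pg-vmotion", "transportType": "VMOTION"},
        ],
    },
    {
        # Secondary switch: vSAN traffic only, not used by NSX-T.
        "name": "vDS02",
        "isUsedByNsxt": False,
        "portGroupSpecs": [
            {"name": "wld01-pg-vsan", "transportType": "VSAN"},
        ],
    },
]

print(json.dumps({"vdsSpecs": vds_specs}, indent=2))
```

Note where the },{ separator sits: between the closing brace of the vDS01 object and the opening brace of the vDS02 object.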

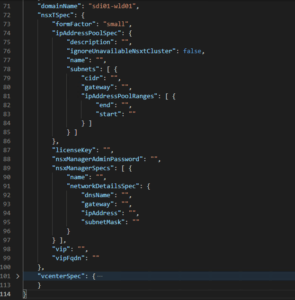

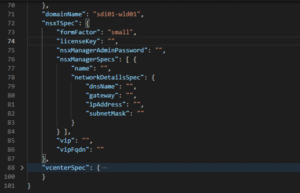

Configure nsxTSpec section

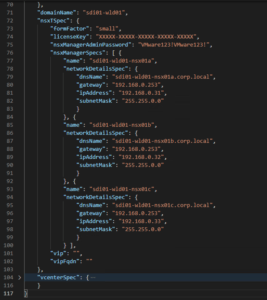

- Expand the nsxTSpec section and select the appropriate formFactor for the NSX-T Manager appliances. In my case, I used small because this is a lab setup.

- Collapse the ipAddressPoolSpec section and remove it together with its brackets. We already configured the IP address pool in the nsxClusterSpec of the clusterSpec section.

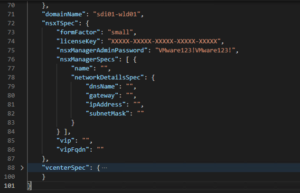

- Enter a valid NSX-T license key and the NSX-T Manager password. Keep in mind that the password must be at least 12 characters long and meet the NSX-T complexity requirements. Check the NSX-T online documentation for details.

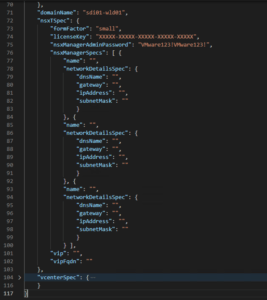

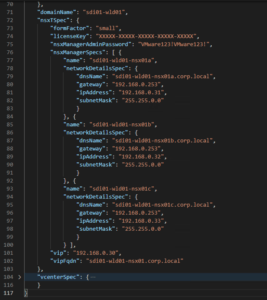

- By default, VCF deploys the NSX-T Manager cluster with three appliances. Therefore, copy the entire block containing name and networkDetailsSpec and paste it two more times, so that each entry holds the settings for one NSX-T Manager appliance. Add brackets and commas between the node specifications as needed, and verify the JSON structure again.

- Next, enter the name and network settings for each NSX-T Manager node.

- To finish the NSX-T configuration, enter the VIP address and the VIP FQDN for the NSX-T Manager cluster.
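Because the three node entries differ only in name and address, they are easy to generate. This sketch assumes the field names (`nsxManagerSpecs`, `networkDetailsSpec` with `ipAddress`, `dnsName`, `subnetMask`, `gateway`, plus `vip`, `vipFqdn`, `licenseKey`, `nsxManagerAdminPassword`, and `formFactor`) match the generated nsxTSpec example. All names, addresses, and the password are placeholders. The password check only covers length and character classes; NSX-T applies further rules, such as rejecting dictionary words.

```python
import re


def nsx_manager_specs(nodes, subnet_mask, gateway):
    """Build one nsxManagerSpecs entry per (name, ip, fqdn) tuple."""
    return [
        {
            "name": name,
            "networkDetailsSpec": {
                "ipAddress": ip,
                "dnsName": fqdn,
                "subnetMask": subnet_mask,
                "gateway": gateway,
            },
        }
        for name, ip, fqdn in nodes
    ]


def password_looks_ok(pw: str) -> bool:
    """Rough pre-check: 12+ characters with lower, upper, digit, and special."""
    return (
        len(pw) >= 12
        and re.search(r"[a-z]", pw) is not None
        and re.search(r"[A-Z]", pw) is not None
        and re.search(r"\d", pw) is not None
        and re.search(r"[^A-Za-z0-9]", pw) is not None
    )


nsxt_spec = {
    "nsxManagerSpecs": nsx_manager_specs(
        [
            ("wld01-nsx01a", "172.16.11.81", "wld01-nsx01a.lab.local"),
            ("wld01-nsx01b", "172.16.11.82", "wld01-nsx01b.lab.local"),
            ("wld01-nsx01c", "172.16.11.83", "wld01-nsx01c.lab.local"),
        ],
        subnet_mask="255.255.255.0",
        gateway="172.16.11.1",
    ),
    "vip": "172.16.11.80",
    "vipFqdn": "wld01-nsx01.lab.local",
    "licenseKey": "XXXXX-XXXXX-XXXXX-XXXXX-XXXXX",
    "nsxManagerAdminPassword": "Ch4nge-Me-Pl3ase!",
    "formFactor": "small",
}
```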

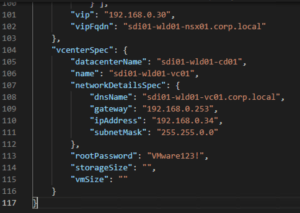

Configure vcenterSpec section

- Expand the vcenterSpec section.

- Enter the datacenterName, the vCenter VM name, and specify the IP settings for the vCenter as well as the root password.

- If you need large or extra-large storage for your vCenter Server, specify it with the storageSize parameter. Otherwise, remove this setting and vCenter Server will be deployed with its default storage configuration.

- Enter the size of the vCenter Server VM.
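The only conditional part of vcenterSpec is storageSize. In the sketch below it is simply left out when no value is given, which matches the advice above. The field names (`name`, `datacenterName`, `networkDetailsSpec`, `rootPassword`, `vmSize`, `storageSize`) are assumed to follow the generated example, and the default `small` VM size is a placeholder choice.

```python
from typing import Optional


def vcenter_spec(
    vm_name: str,
    datacenter: str,
    ip: str,
    fqdn: str,
    subnet_mask: str,
    gateway: str,
    root_password: str,
    vm_size: str = "small",
    storage_size: Optional[str] = None,
) -> dict:
    """Build a vcenterSpec dict; storageSize is only added when provided."""
    spec = {
        "name": vm_name,
        "datacenterName": datacenter,
        "networkDetailsSpec": {
            "ipAddress": ip,
            "dnsName": fqdn,
            "subnetMask": subnet_mask,
            "gateway": gateway,
        },
        "rootPassword": root_password,
        "vmSize": vm_size,
    }
    if storage_size is not None:
        # Only emit the key when a non-default storage size is requested.
        spec["storageSize"] = storage_size
    return spec
```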

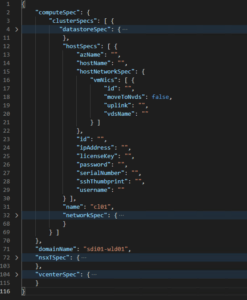

Configure hostSpec section

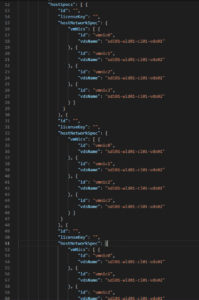

- The final set of parameters that needs to be provided in the JSON specification are the settings for the ESXi hosts. For better visibility, collapse all other sub-sections and expand only the hostSpecs section.

- Remove the following parameters, as they are optional:
  - Under hostNetworkSpec, remove moveToNvds and uplink.

- Based on the example scenario, the ESXi hosts have 4 physical NICs. This means that we need one entry with id and vdsName values per vmnic, so copy that entry until there are four. Again, add the brackets as required so that the JSON format is correct.

- All ESXi hosts are configured identically, so we can now map the vmnics to the vDSs: vmnic0 and vmnic2 to vDS01, and vmnic1 and vmnic3 to vDS02.

- We will be creating the new VI workload domain with 3 ESXi hosts. This means that we have to copy the entire host configuration 2 more times. I also moved the id and licenseKey values to the top of each ESXi host specification to make the JSON more readable. Make sure that you add brackets where needed and verify the JSON format.

- The final step is to enter the host IDs that we looked up earlier (in the Get host IDs part) in the id parameters and provide a valid vSphere license key for each ESXi host.
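Since all three hosts share the same vmnic-to-vDS mapping, the hostSpecs array can also be generated instead of copied by hand. In this sketch the host IDs are the ones from the Get host IDs step, the license key is a placeholder, and the field names (`hostNetworkSpec`, `vmNics`, `id`, `vdsName`) are assumed to match the generated example.

```python
# vmnic-to-vDS mapping from the example scenario.
VMNIC_MAP = {"vmnic0": "vDS01", "vmnic2": "vDS01", "vmnic1": "vDS02", "vmnic3": "vDS02"}


def host_specs(host_ids, license_key):
    """Return one hostSpecs entry per host ID, all with the same NIC layout."""
    return [
        {
            "id": host_id,
            "licenseKey": license_key,
            "hostNetworkSpec": {
                # Sorted so the vmnics appear in order vmnic0..vmnic3.
                "vmNics": [
                    {"id": nic, "vdsName": vds} for nic, vds in sorted(VMNIC_MAP.items())
                ]
            },
        }
        for host_id in host_ids
    ]
```

Generating the entries this way guarantees the hosts really are configured identically, which is exactly what the manual copy-and-paste step is trying to achieve.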

At this point, the JSON specification is completed.

Validate the JSON specification

Once the JSON specification is completed, it needs to be validated by the SDDC Manager before executing.

- Open the SDDC Manager API Explorer and expand the APIs for managing domains. Go to POST /v1/domains/validations.

- Paste the JSON specification into the value window and click Execute. Click Continue when asked if you are sure. This API only validates the JSON specification. It does not make any changes or start any deployment tasks.

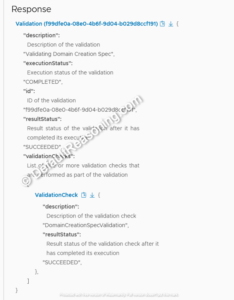

- Wait until the validation process is completed.

- Under the Response section, click the validation response and verify that it was completed successfully.

If the validation fails, fix the errors and run the validation again.
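The validation can also be triggered from a script. This sketch expects a bearer token such as the one returned by `POST /v1/tokens`, and the SDDC Manager FQDN is a placeholder. The `spec` is sent unchanged, so it should be the same body the API Explorer accepted. The `executionStatus`/`resultStatus` fields and their `COMPLETED`/`SUCCEEDED` values are assumptions about the validation response; compare them with what the API Explorer returns in your environment.

```python
import json
import urllib.request

SDDC_MANAGER = "https://sddc-manager.example.local"  # placeholder FQDN


def validate_domain_spec(spec: dict, token: str) -> dict:
    """POST the spec to /v1/domains/validations and return the parsed response."""
    req = urllib.request.Request(
        f"{SDDC_MANAGER}/v1/domains/validations",
        data=json.dumps(spec).encode(),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def validation_passed(result: dict) -> bool:
    """True only if validation finished and succeeded (assumed field names)."""
    return (
        result.get("executionStatus") == "COMPLETED"
        and result.get("resultStatus") == "SUCCEEDED"
    )
```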

Execute the JSON specification

Now that the JSON specification has been validated by SDDC Manager, it can finally be executed to create the new VI workload domain.

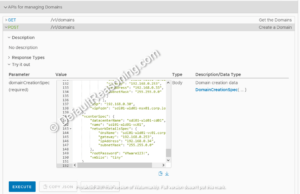

- With the API Explorer still open, go to APIs for managing domains and expand the POST /v1/domains section.

- Paste the JSON specification into the value window.

- Remove the domainCreationSpec wrapper from the JSON and delete its opening and closing curly braces. Make sure that the JSON format is still correct.

- Click the Execute button and confirm the execution of the API call.

- The Response section shows the task ID and indicates that the task is in progress.
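The unwrapping step and the follow-up monitoring can be scripted as well. In this sketch, `unwrap_spec` removes the domainCreationSpec wrapper described above and leaves an already unwrapped spec alone, and `wait_for_task` polls a task by ID. The fetch function is passed in so it can be a real HTTP call (assumed here to be `GET /v1/tasks/{id}` with the same bearer token) or a stub. The status strings treated as still running are also an assumption.

```python
import time
from typing import Callable


def unwrap_spec(spec: dict) -> dict:
    """Return the inner spec expected by POST /v1/domains."""
    return spec.get("domainCreationSpec", spec)


# Status values treated as "still running" (assumed; adjust to what your
# SDDC Manager actually returns).
RUNNING = {"IN_PROGRESS", "PENDING"}


def wait_for_task(
    task_id: str,
    fetch_task: Callable[[str], dict],
    interval_s: float = 60.0,
    timeout_s: float = 5 * 60 * 60,
) -> dict:
    """Poll fetch_task(task_id) until its status leaves RUNNING or time runs out."""
    deadline = time.monotonic() + timeout_s
    while True:
        task = fetch_task(task_id)
        # Normalize e.g. "In Progress" to "IN_PROGRESS" before comparing.
        status = str(task.get("status", "")).upper().replace(" ", "_")
        if status not in RUNNING:
            return task
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} still {status} after {timeout_s} s")
        time.sleep(interval_s)
```

The five-hour default timeout leaves some headroom over a deployment that typically takes around three hours; the returned task still needs to be checked for success or failure.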

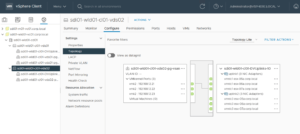

You can monitor the domain creation steps in the SDDC Manager tasks pane. Depending on the speed of your hardware, the deployment process takes around 3 hours. The final configuration of the NICs and vDSs looks as follows in vCenter Server.

This concludes the deployment of a VI workload domain with multiple physical NICs and 2 vSphere Distributed Switches using SDDC Manager API.

Cheers!

– Marek.Z
